GPUs, TPUs, NPUs

GPU is not being used

Please ensure you have the NVIDIA CUDA drivers installed:

- Install the CUDA 11.7 Drivers

- Install the CUDA Toolkit 11.7.

- Download and run our cuDNN install script.
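Once the drivers and toolkit are installed, a quick sanity check is whether the driver's nvidia-smi utility and the toolkit's nvcc compiler can be found on your PATH. Here's a minimal Python sketch of that check. It assumes the installers added their folders to PATH, which is typical but not guaranteed, and find_cuda_tools is just an illustrative name:

```python
import shutil
from typing import Dict, Optional

# Tools we expect after installation: nvidia-smi ships with the NVIDIA driver,
# nvcc ships with the CUDA Toolkit.
CUDA_TOOLS = ("nvidia-smi", "nvcc")


def find_cuda_tools() -> Dict[str, Optional[str]]:
    """Map each tool name to its full path, or None if it isn't on PATH."""
    return {tool: shutil.which(tool) for tool in CUDA_TOOLS}


if __name__ == "__main__":
    for tool, path in find_cuda_tools().items():
        print(f"{tool}: {path or 'not found on PATH'}")
```

A tool reported as "not found on PATH" doesn't always mean it isn't installed, only that this shell can't see it.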

Inference randomly fails

Loading AI models can use a lot of memory, so if you have a limited amount of memory on your GPU, or on your system as a whole, you have a few options:

- Disable the modules you don't need. The dashboard (http://localhost:32168) lets you disable modules individually.

- If you are using a GPU, disable GPU acceleration for modules that don't need the extra power of the GPU.

- If a module offers smaller models (e.g. Object Detector (YOLO)), try selecting a smaller model size via the dashboard.

Some modules, especially Face comparison, may fail if there is not enough memory. We're working on making the system leaner and meaner.
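Before deciding which modules to disable or move off the GPU, it can help to see how much GPU memory is actually free. Below is a minimal Python sketch that reads nvidia-smi's CSV query output. It assumes nvidia-smi is on PATH, and the names GpuMemory, parse_gpu_memory and query_gpu_memory are hypothetical helpers, not part of CodeProject.AI Server:

```python
import subprocess
from dataclasses import dataclass
from typing import List

# Fields to ask nvidia-smi for; with "nounits", memory values are plain MiB numbers.
QUERY = "index,name,memory.total,memory.used,memory.free"


@dataclass
class GpuMemory:
    index: int
    name: str
    total_mib: int
    used_mib: int
    free_mib: int


def parse_gpu_memory(csv_text: str) -> List[GpuMemory]:
    """Parse 'csv,noheader,nounits' output: one GPU per line."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # rsplit so a comma inside the GPU name cannot break the numeric fields
        head, total, used, free = line.rsplit(",", 3)
        index, name = head.split(",", 1)
        gpus.append(GpuMemory(int(index), name.strip(), int(total), int(used), int(free)))
    return gpus


def query_gpu_memory() -> List[GpuMemory]:
    """Run nvidia-smi and return per-GPU memory figures."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_memory(out)


if __name__ == "__main__":
    try:
        gpus = query_gpu_memory()
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"Could not query nvidia-smi: {exc}")
    else:
        for gpu in gpus:
            print(f"GPU {gpu.index} ({gpu.name}): {gpu.free_mib} MiB free of {gpu.total_mib} MiB")
```

Running this before and after enabling a module gives a rough idea of how much GPU memory that module's model takes.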

You have an NVIDIA card but GPU/CUDA utilization isn't being reported in the CodeProject.AI Server dashboard when running under Docker

Please ensure you start the Docker image with the --gpus all parameter:
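The flag belongs among the docker run options, before the image name. Here's a minimal Python sketch that assembles such a command line. The image name is a placeholder for whichever CUDA-enabled CodeProject.AI Server image you use, and mapping port 32168 assumes the server listens on the same port as the dashboard address mentioned earlier:

```python
import shlex
from typing import List

# Placeholder only: substitute the CUDA-enabled image/tag you actually run.
IMAGE = "your-codeproject-ai-cuda-image"


def docker_run_args(image: str, port: int = 32168, use_gpu: bool = True) -> List[str]:
    """Build a 'docker run' argument list for a detached container."""
    args = ["docker", "run", "-d", "-p", f"{port}:{port}"]
    if use_gpu:
        # Expose all NVIDIA GPUs to the container; without this the
        # container cannot see the GPU.
        args += ["--gpus", "all"]
    # Docker treats everything after the image name as the container's
    # command, so the image must come after all docker run options.
    args.append(image)
    return args


if __name__ == "__main__":
    print(shlex.join(docker_run_args(IMAGE)))
```

The same list could be passed to subprocess.run to launch the container directly.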

How to downgrade CUDA to 11.8

If you're on Windows and your GPU isn't starting even though it supports CUDA and CUDA is installed, make sure you are running the correct CUDA version.

Open a command prompt and type:

On Windows, we recommend running CUDA 11.8. If you are not running CUDA 11.8, uninstall your current version of CUDA, then download and install CUDA 11.8: https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_522.06_windows.exe
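nvcc --version is the usual way to see which CUDA Toolkit release is installed. Here's a minimal Python sketch that runs it and compares the reported release against the 11.8 recommended here. It assumes nvcc is on PATH and that its output contains the usual "release X.Y" text (for example "Cuda compilation tools, release 11.8, V11.8.89"). The function names are illustrative:

```python
import re
import subprocess
from typing import Optional, Tuple

RECOMMENDED = (11, 8)  # the CUDA version this page recommends on Windows


def parse_nvcc_release(output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from the 'release X.Y' part of nvcc --version output."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def installed_cuda_version() -> Optional[Tuple[int, int]]:
    """Run nvcc --version; return None if nvcc is missing or fails."""
    try:
        result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True, check=True)
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    version = installed_cuda_version()
    if version is None:
        print("nvcc not found: the CUDA Toolkit may not be installed or on PATH")
    elif version != RECOMMENDED:
        print(f"Found CUDA {version[0]}.{version[1]}; recommended is {RECOMMENDED[0]}.{RECOMMENDED[1]}")
    else:
        print("CUDA 11.8 found")
```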

CUDA not available

If you have an NVIDIA card and you see the following in the CodeProject.AI Server logs:

You can check which version of CUDA you're running by opening a terminal and running:

then:

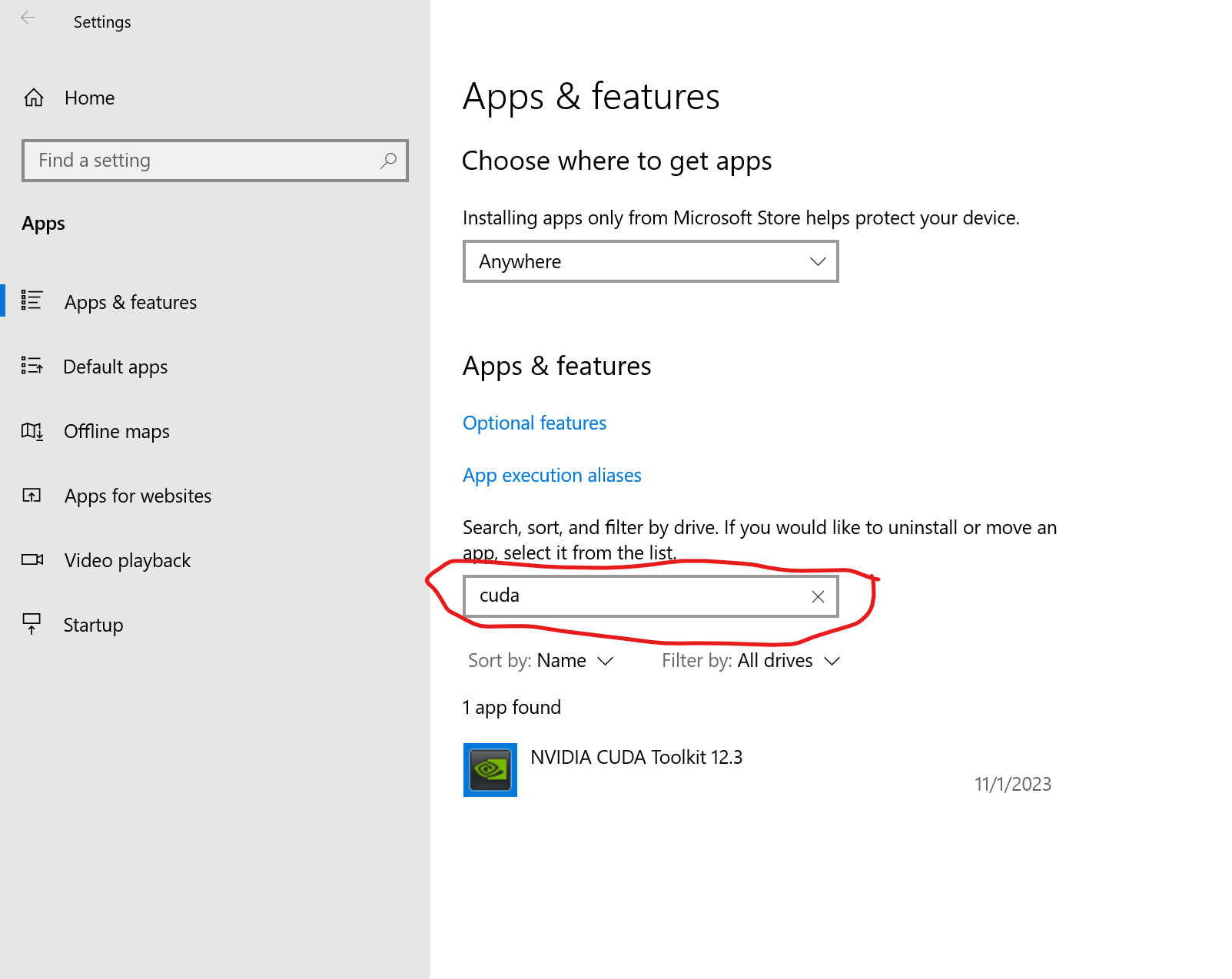
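nvidia-smi is another useful check. The "CUDA Version" shown in its header is the newest CUDA version the installed driver supports, which is not necessarily the toolkit version that nvcc reports. Here's a minimal Python sketch that reads that field. It assumes nvidia-smi is on PATH and prints its usual banner, and the function names are illustrative:

```python
import re
import subprocess
from typing import Optional


def parse_driver_cuda_version(smi_output: str) -> Optional[str]:
    """Return the 'X.Y' from the 'CUDA Version: X.Y' field of nvidia-smi output."""
    match = re.search(r"CUDA Version:\s*(\d+\.\d+)", smi_output)
    return match.group(1) if match else None


def driver_cuda_version() -> Optional[str]:
    """Run nvidia-smi; return None if it is missing, fails, or has no CUDA field."""
    try:
        result = subprocess.run(["nvidia-smi"], capture_output=True, text=True, check=True)
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_driver_cuda_version(result.stdout)


if __name__ == "__main__":
    version = driver_cuda_version()
    if version is None:
        print("nvidia-smi not found or gave no CUDA version: check the NVIDIA driver install")
    else:
        print(f"Driver supports CUDA up to {version}")
```

If the driver's supported version is lower than the toolkit version nvcc reports, updating the NVIDIA driver is usually the first thing to try.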

If running nvcc --version reports that the command is unknown, you may not have CUDA installed. You can confirm this by opening Windows Settings, going to Apps & features, and searching for "CUDA". If CUDA is installed, you will see the following: